The Threshold That Gives You the Best F1 Score

In pattern recognition, information retrieval, and classification (machine learning), precision and recall are performance metrics that apply to data retrieved from a collection, corpus, or sample space.

In object detection, the intersection-over-union (IoU) threshold is the overlap with the ground-truth labels above which a predicted bounding box is considered a true positive.
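To make the IoU criterion concrete, here is a minimal sketch in plain Python. It assumes axis-aligned boxes given as `(x1, y1, x2, y2)` corner coordinates. The helper names `iou` and `is_true_positive` and the example threshold of 0.5 are illustrative choices, not the API of any particular detection library.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    # Corners of the overlapping rectangle (may be empty)
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_box, gt_box, iou_thresh=0.5):
    """A prediction counts as a true positive when its IoU with the ground truth exceeds the threshold."""
    return iou(pred_box, gt_box) > iou_thresh


# Two 2x2 boxes overlapping in a 1x1 square: IoU = 1 / 7
print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
print(is_true_positive((0, 0, 2, 2), (1, 1, 3, 3)))  # False at the assumed 0.5 threshold
```

Raising the threshold makes the matching stricter, so fewer detections count as true positives.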

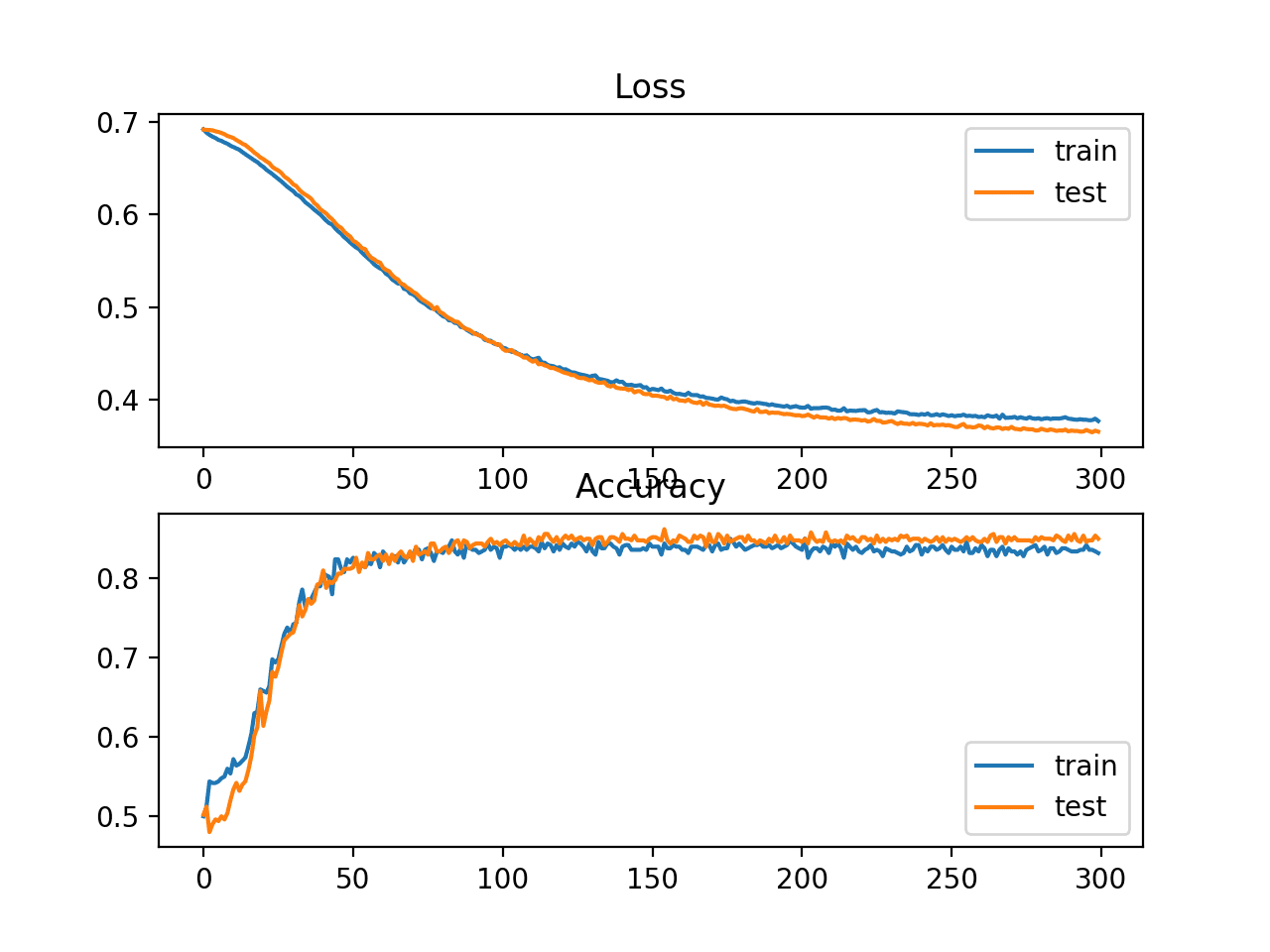

If you give very high class weights to the minority class, chances are the algorithm will become biased towards the minority class, and the errors on the majority class will increase.

Mapping these predicted probabilities $(\hat{p}, 1-\hat{p})$ to a 0/1 classification, by choosing a threshold beyond which you classify a new observation as 1 vs. 0, is not part of the statistics any more; it is part of the decision component.

XGBoost feature importance bar chart.
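One common way to set class weights is the "balanced" heuristic, weight = n_samples / (n_classes * class_count), which is what scikit-learn's `class_weight="balanced"` option uses. The plain-Python sketch below (hypothetical helper names, toy data) computes those weights and shows how they scale a binary cross-entropy loss. It also hints at why pushing the minority weight too high is risky: errors on the majority class become comparatively cheap.

```python
import math
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count): rarer classes get larger weights."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


def weighted_log_loss(y_true, p_pred, weights):
    """Mean binary cross-entropy where each sample's loss is scaled by its class weight."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, 1e-15), 1 - 1e-15)  # clip to avoid log(0)
        loss = -(y * math.log(p) + (1 - y) * math.log(1 - p))
        total += weights[y] * loss
    return total / len(y_true)


labels = [0] * 9 + [1]  # toy data: 9 negatives, 1 positive
weights = balanced_class_weights(labels)
print(weights)  # {0: 0.555..., 1: 5.0}

probs = [0.1] * 10  # hypothetical model that always predicts a low probability
print(weighted_log_loss(labels, probs, {0: 1.0, 1: 1.0}))
print(weighted_log_loss(labels, probs, weights))  # larger: the missed positive now costs 5x
```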

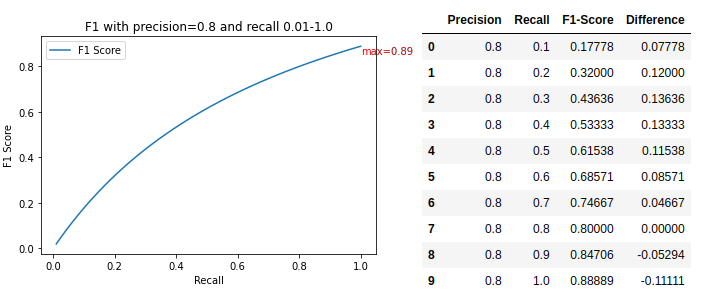

See also: F1 score on Wikipedia; Principal Component Analysis (PCA).

The threshold is the cut-off point in probability between the positive and negative classes. By default it is typically set at 0.5, halfway between the two outcomes 0 and 1.
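Applying the threshold is a one-line mapping from positive-class probabilities to labels. This sketch assumes that a probability exactly equal to the threshold counts as positive (`>=`); libraries differ on that tie-breaking detail, so treat it as an assumption. The helper name `apply_threshold` is hypothetical.

```python
def apply_threshold(probabilities, threshold=0.5):
    """Map positive-class probabilities to 0/1 labels: 1 if p >= threshold, else 0."""
    return [1 if p >= threshold else 0 for p in probabilities]


probs = [0.2, 0.35, 0.5, 0.7]
print(apply_threshold(probs))       # default cut-off of 0.5
print(apply_threshold(probs, 0.3))  # a lower cut-off labels more samples as positive
```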

And here you need the probabilistic output of your model, but also considerations like…

According to the analysis, the PNN with two hidden-layer neurons gives an accuracy of 0.9887 (98.9%).

You can see that the features are automatically named according to their index in the input array X, from F0 to F7.
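Pairing importance scores with readable names is a small mapping step. The sketch below uses made-up importance values and hypothetical feature names purely for illustration; when no names are supplied it falls back to index-based labels F0, F1, and so on, as described above. The helper `name_features` is not part of XGBoost.

```python
def name_features(importances, names=None):
    """Pair each importance score with a feature name, sorted from most to least important.

    Without explicit names, features are labelled by their column index: F0, F1, ...
    """
    if names is None:
        names = [f"F{i}" for i in range(len(importances))]
    if len(names) != len(importances):
        raise ValueError("need exactly one name per importance score")
    return sorted(zip(names, importances), key=lambda pair: pair[1], reverse=True)


scores = [0.10, 0.55, 0.35]  # hypothetical importance scores for a three-feature model
print(name_features(scores))                             # index-based names
print(name_features(scores, ["age", "bmi", "glucose"]))  # hypothetical readable names
```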

Manually mapping these indices to names in the problem description, we can see that the plot shows F5 (body mass index) has the highest importance and F3 (skin fold…

Precision, also called positive predictive value, is the fraction of relevant instances among the retrieved instances, while recall, also known as sensitivity, is the fraction of relevant instances that were retrieved.

Here we get an optimal threshold of 0.3 instead of our default 0.5.
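A straightforward way to find such a threshold is to sweep candidate values and keep the one with the highest F1 score. The sketch below is plain Python with hypothetical helper names and a toy dataset; the grid of 101 thresholds from 0.00 to 1.00 is an arbitrary choice. It uses the equivalent form F1 = 2TP / (2TP + FP + FN).

```python
def f1_at_threshold(y_true, probs, threshold):
    """F1 score of the labels obtained by predicting 1 when p >= threshold."""
    tp = fp = fn = 0
    for y, p in zip(y_true, probs):
        pred = 1 if p >= threshold else 0
        if pred == 1 and y == 1:
            tp += 1
        elif pred == 1 and y == 0:
            fp += 1
        elif pred == 0 and y == 1:
            fn += 1
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def best_f1_threshold(y_true, probs, thresholds=None):
    """Return (threshold, F1) for the candidate with the highest F1; ties keep the lowest threshold."""
    if thresholds is None:
        thresholds = [i / 100 for i in range(101)]  # 0.00, 0.01, ..., 1.00
    best_t, best_f1 = None, -1.0
    for t in thresholds:
        score = f1_at_threshold(y_true, probs, t)
        if score > best_f1:
            best_t, best_f1 = t, score
    return best_t, best_f1


y_true = [0, 0, 0, 1, 1, 1]
probs = [0.1, 0.2, 0.6, 0.35, 0.4, 0.7]
print(f1_at_threshold(y_true, probs, 0.5))  # 0.4 at the default cut-off
print(best_f1_threshold(y_true, probs))     # (0.21, 0.857...): the best cut-off is below 0.5
```

In practice the sweep should run on a validation set rather than the test set, so the chosen threshold is not tuned to the data used for the final evaluation.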

Sensitivity, also known as the true positive rate (TPR), is the same as recall. The F1 score is a more useful measure than accuracy for problems with an uneven class distribution, because it takes into account both false positives and false negatives.
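A quick numerical illustration with made-up counts: a model that predicts the majority class for every sample can score high accuracy while its F1 score is zero. The helper below is a plain-Python sketch with a hypothetical name. Returning 0.0 when precision or recall is undefined is an assumed convention; scikit-learn exposes this choice through its `zero_division` parameter.

```python
def metrics_from_counts(tp, fp, fn, tn):
    """Precision, recall, F1 and accuracy from confusion-matrix counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


# 100 samples, 5 positives; the model predicts "negative" for everything
print(metrics_from_counts(tp=0, fp=0, fn=5, tn=95))  # accuracy 0.95, F1 0.0

# A model that actually finds some positives
print(metrics_from_counts(tp=3, fp=2, fn=2, tn=93))  # accuracy 0.96, F1 0.6
```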

Thanks for reading. I hope this article gives you an idea of some of the methods that can be used when handling an imbalanced dataset.

The best value for the F1 score is 1 and the worst is 0. There is a limit up to which you should increase the class weight of the minority class and decrease the class weight of the majority class.

The F1 score is often considered one of the best metrics for classification models, whether or not the classes are imbalanced. In this tutorial, you discovered ROC curves and precision-recall curves, and when to use each to interpret predicted probabilities for binary classification problems. For more, see the blog post on ROC and precision-recall with imbalanced datasets.
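A precision-recall curve is traced by sweeping the decision threshold over the model's scores. The plain-Python sketch below, using a toy dataset and a hypothetical helper name, computes the (recall, precision) pairs; for real use, `sklearn.metrics.precision_recall_curve` computes the full curve. A common rule of thumb is to prefer precision-recall curves over ROC curves when the positive class is rare.

```python
def pr_curve_points(y_true, scores):
    """(recall, precision) for each distinct score used as a threshold (predict 1 if score >= t).

    Assumes y_true contains at least one positive (label 1).
    """
    n_pos = sum(y_true)
    points = []
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if s >= t and y == 1)
        fp = sum(1 for y, s in zip(y_true, scores) if s >= t and y == 0)
        # t is one of the scores, so at least one sample is predicted positive
        points.append((tp / n_pos, tp / (tp + fp)))
    return points


y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
for recall, precision in pr_curve_points(y_true, scores):
    print(f"recall={recall:.2f} precision={precision:.2f}")
```

Lowering the threshold moves along the curve towards higher recall, usually at the cost of precision.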

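Its best value is 1 and the worst value is 0.

Gradient descent is best used when the parameters cannot be calculated analytically (e.g. using linear algebra) and must be searched for by an optimization algorithm.

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| 0 | 0.98 | 0.98 | 0.98 | 11044 |
| 1 | 0.81 | 0.79 | 0.80 | 889 |
| accuracy | | | 0.97 | 11933 |
| macro avg | 0.89 | 0.89 | 0.89 | 11933 |
| weighted avg | 0.97 | 0.97 | 0.97 | 11933 |

Best threshold.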
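The two summary rows of such a classification report can be recomputed from the per-class rows: the macro average is the unweighted mean over classes, and the weighted average weights each class by its support. This plain-Python sketch (hypothetical helper name) uses the class 0 and class 1 F1 values and supports from the report. Because those inputs are already rounded to two decimals, results computed this way may differ slightly from a report built on unrounded values.

```python
def macro_and_weighted(per_class_scores, supports):
    """Return (macro average, support-weighted average) of per-class scores."""
    macro = sum(per_class_scores) / len(per_class_scores)
    total = sum(supports)
    weighted = sum(s * n for s, n in zip(per_class_scores, supports)) / total
    return macro, weighted


# Per-class F1 scores and supports for classes 0 and 1, taken from the report
f1_scores = [0.98, 0.80]
supports = [11044, 889]
macro_f1, weighted_f1 = macro_and_weighted(f1_scores, supports)
print(f"macro avg F1: {macro_f1:.2f}, weighted avg F1: {weighted_f1:.2f}")  # 0.89 and 0.97
```

The gap between the two averages is a signature of imbalance: the weighted average is dominated by the large majority class, while the macro average gives the 889-sample minority class equal say.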

In Python, the F1 score can be computed with `f1_score` from `sklearn.metrics`.

4. How do you combat the curse of dimensionality?

Any suggestions about the content are welcome.

The `fit` method takes arguments such as `epochs=10`, `lr=None`, `one_cycle=True`, `early_stopping=False`, and `checkpoint`.

If `mean` is False, the function returns the class-wise average precision; otherwise it returns the mean average precision.
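The `mean` switch can be mimicked in a short sketch. It assumes detections have already been matched to ground truth (for example with an IoU threshold) and sorted by descending confidence, and it uses the simple non-interpolated definition of average precision. Detection toolkits often use interpolated variants, so this is not the exact computation of any specific library; the names `average_precision` and `summarize_ap` and the class names are hypothetical.

```python
def average_precision(ranked_hits, n_positives):
    """Non-interpolated AP: mean of the precision at each rank where a true positive occurs.

    ranked_hits: booleans for detections sorted by descending confidence (True = true positive).
    n_positives: number of ground-truth objects of this class.
    """
    tp = 0
    precisions = []
    for rank, hit in enumerate(ranked_hits, start=1):
        if hit:
            tp += 1
            precisions.append(tp / rank)
    return sum(precisions) / n_positives if n_positives else 0.0


def summarize_ap(per_class_hits, per_class_positives, mean=False):
    """Dict of per-class AP if mean is False, otherwise their mean (mAP) as a float."""
    ap = {cls: average_precision(hits, per_class_positives[cls])
          for cls, hits in per_class_hits.items()}
    if mean:
        return sum(ap.values()) / len(ap) if ap else 0.0
    return ap


hits = {"car": [True, False, True], "person": [False, True]}
positives = {"car": 2, "person": 1}
print(summarize_ap(hits, positives))             # per-class dict
print(summarize_ap(hits, positives, mean=True))  # single float (mAP)
```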

This gives 0.881 and 1.000 as output. Hence it measures…

3. Explain over- and under-fitting and how to combat them.

In this case the best threshold is 1.0, so the thresholding technique behaves the same as the imbalanced case. The PNN with three hidden-layer neurons gives an accuracy of 0.9895 (99.0%). The PNNs with five, six, and seven hidden-layer neurons reach 99.4%, 99.8%, and 99.9%, respectively.

Running the example gives us a more useful bar chart.

The function returns a dict if `mean` is False, otherwise a float.

This highlights that the best possible classifier, one that achieves perfect skill, sits at the top-left of the ROC plot, at coordinate (0, 1).
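The top-left point can be checked directly by computing ROC points for a classifier that ranks every positive above every negative. This is a plain-Python sketch with a hypothetical helper name and toy data; for real use, `sklearn.metrics.roc_curve` computes the curve.

```python
def roc_curve_points(y_true, scores):
    """(FPR, TPR) points, sweeping each distinct score as a threshold (predict 1 if score >= t).

    Assumes y_true contains both classes (labels 0 and 1).
    """
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if s >= t and y == 1)
        fp = sum(1 for y, s in zip(y_true, scores) if s >= t and y == 0)
        points.append((fp / n_neg, tp / n_pos))
    return points


# A perfect ranking: every positive scores higher than every negative
perfect = roc_curve_points([0, 0, 1, 1], [0.1, 0.2, 0.8, 0.9])
print(perfect)  # [(0.0, 0.0), (0.0, 0.5), (0.0, 1.0), (0.5, 1.0), (1.0, 1.0)]
print((0.0, 1.0) in perfect)  # True: the curve passes through the top-left corner
```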

The PNN with four hidden-layer neurons gives a somewhat better accuracy of 0.9915 (99.1%).

The F1 score is the harmonic mean of the precision and recall of the respective class.
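Written out, with precision $P = \frac{TP}{TP+FP}$ and recall $R = \frac{TP}{TP+FN}$, the harmonic-mean definition expands to a form that uses only confusion-matrix counts. As a worked check, class 1 in the classification report in this post has precision 0.81 and recall 0.79:

$$
F_1 = \frac{2}{\frac{1}{P} + \frac{1}{R}} = \frac{2PR}{P + R} = \frac{2\,TP}{2\,TP + FP + FN}
$$

$$
F_1^{(\text{class }1)} = \frac{2 \cdot 0.81 \cdot 0.79}{0.81 + 0.79} = \frac{1.2798}{1.60} \approx 0.80
$$

which matches the reported f1-score of 0.80. Because the harmonic mean is pulled towards the smaller of the two values, a classifier cannot reach a high F1 by maximizing only precision or only recall.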

The best value of recall is 1 and the worst value is 0.

To check the performance of the model, we will use the F1 score as the metric, not accuracy.
